For generations before the rise of computing, classicists produced concordances of Latin and Greek authors. Concordances are verbal indices, complete alphabetical lists of words in a text. They typically feature a dictionary headword, followed by every instance of that word in the text, with a citation for each instance. Sometimes the word itself is listed in the form it appears in each location, and sometimes a line or two of context is included as well. Here are some examples of this now defunct genre of classical scholarship (Latin only):

- E. B. Jenkins, Index Verborum Terentianus (1932)

- L. Roberts, A Concordance of Lucretius (1968)

- M.N. Wetmore, Index Verborum Catullianus (1912)

- N.P. MacCarren, A Critical Concordance to Catullus (1977)

- Bennet, Index Verborum Sallustianus (1970)

- M.A. Oldfather et al., Index Verborum Ciceronis Epistularum (1938); Index Verborum in Ciceronis Rhetorica (1964)

- W.W. Briggs et al., Concordantia in Varronis Libros De Re Rustica (1983)

- L. Cooper, A Concordance to the Works of Horace (1916)

- E. Staedler, Thesaurus Horatianus (1962)

- D. Bo, Lexicon Horatianum (1965-66)

- J.J. Iso, Concordantia Horatiana (1990)

- E.N. O’Neill, A Critical Concordance to the Corpus Tibullianum (1971)

There are many others, for Livy, Vitruvius, Lucan, Valerius Flaccus, Statius, Silius Italicus, Manilius, etc. You can find them listed in the bibliographies in Michael von Albrecht’s Geschichte der römischen Literatur.

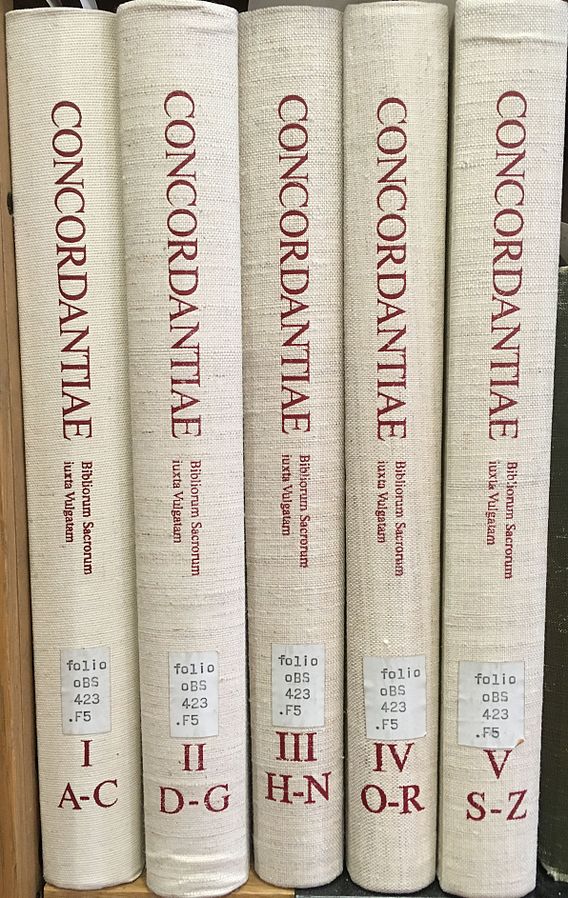

Biblical concordances go back to the early days of printing, and even before. For classical scholars, the tedious work of compiling such concordances was considered helpful as a way of studying the characteristic vocabulary of the authors. Concordances allowed scholars to find parallel passages quickly. They helped translators and commentators by allowing access to a full list of instances of a particular lemma, something dictionaries did not provide. They could also help scholars discern which words did not appear in an author, which words were being avoided. They could help textual critics, too, in their efforts to come up with plausible emendations.

All these functions are of course much easier now with computers. Right? In the case of Latin the answer is, well, sort of. As we all know from using search engines, a computer search for a word does not always return only instances of the word you want (Latin music, anybody?). Many words have homonyms, like wind (air) and wind (turning). Or suppose you wanted to study meanings of the verb “to have” in a corpus of English. You would first have to somehow filter out all instances of “have” used as an auxiliary. Have you thought of that, Google engineers? This “homonym problem” is actually far more pervasive in Latin than in English, and linguistic and lexical analysis is made much easier by a concordance that accurately identifies all the instances of every headword in a text. Correctly distinguishing potential homonyms was real, valuable work, and for all practical purposes it is work that cannot be readily duplicated by a computer.

In the digital age, this Latin homonym problem severely hampers the accuracy and usefulness of automatic parsers like the Perseus Word Study Tool. Various researchers (e.g., Patrick Burns) are trying to improve the accuracy of automatic parsers through computing techniques and algorithms, contextual analysis, and so forth. Teams all over the world are hand-parsing Latin and Greek texts to tag for part of speech and dictionary headword, as well as syntactical dependency.

Evidently nobody has thought of mining the dozens of existing print concordances, which are, in effect, fully parsed texts of classical authors re-ordered by dictionary headword. With enough text processing, these works could be used to create fully parsed texts in which each word is paired with its headword, by line or chapter in order of occurrence.
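To make the idea concrete, here is a minimal sketch in Python of what "inverting" a concordance might look like. It is only an illustration under some loud assumptions: that the concordance has already been digitized into headword entries, each listing the printed form and a citation; that a plain base text is available, keyed by the same citations; and that forms can be matched to the text by simple case-insensitive comparison. The names `CONCORDANCE`, `TEXT`, `invert_concordance`, and `to_csv` are hypothetical, and the sample data (the first line of Catullus 85) is typed in by hand, not taken from any actual concordance file.

```python
import csv
import io
from collections import defaultdict

# Hypothetical digitized concordance: headword -> list of (printed form, citation).
# Entries are ordered alphabetically by headword, as in a print concordance.
CONCORDANCE = {
    "amo": [("amo", "85.1")],
    "et": [("et", "85.1")],
    "facio": [("faciam", "85.1")],
    "fortasse": [("fortasse", "85.1")],
    "is": [("id", "85.1")],
    "odi": [("Odi", "85.1")],
    "quare": [("quare", "85.1")],
    "requiro": [("requiris", "85.1")],
}

# Hypothetical base text: citation -> tokens in reading order (punctuation removed).
TEXT = {
    "85.1": ["Odi", "et", "amo", "quare", "id", "faciam", "fortasse", "requiris"],
}


def invert_concordance(concordance, text):
    """Pair each token of the base text with the headword(s) the concordance gives it.

    Returns rows of (citation, position, form, lemma). If a form in a given line
    is listed under more than one headword, the candidates are joined with "|";
    if it is listed under none, the lemma field is left empty for hand correction.
    """
    # Index the concordance by (citation, lower-cased form) for quick lookup.
    lemmas_by_line_and_form = defaultdict(list)
    for lemma, occurrences in concordance.items():
        for form, citation in occurrences:
            lemmas_by_line_and_form[(citation, form.lower())].append(lemma)

    rows = []
    for citation, tokens in text.items():
        for position, token in enumerate(tokens, start=1):
            candidates = lemmas_by_line_and_form.get((citation, token.lower()), [])
            # Drop duplicate headwords while keeping their original order.
            unique = list(dict.fromkeys(candidates))
            rows.append((citation, position, token, "|".join(unique)))
    return rows


def to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["citation", "position", "form", "lemma"])
    writer.writerows(rows)
    return buffer.getvalue()


rows = invert_concordance(CONCORDANCE, TEXT)
print(to_csv(rows))
```

The hard part in practice is everything this sketch assumes away: cleaning up the OCR, normalizing orthography between the concordance and the base text, and resolving lines where the same form occurs twice under different headwords. The sketch flags that last case rather than guessing.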

The first job would be to round up and digitize the concordances. Then one could work with computer scientists to do the requisite text processing (not trivial by any means), and start producing lemmatized texts as .csv spreadsheets and sharing them with the world. Such a project would rescue these old scholarly products and redeem the thousands and thousands of scholarly hours spent producing them. Having readier digital access to more parsed texts would be useful to:

- Any researcher who studies lexical usage and word frequency in classical texts

- The digital project I direct, Dickinson College Commentaries, which features running vocabulary lists, many of them based on fully parsed texts produced by LASLA

- Bret Mulligan’s The Bridge, which uses fully parsed texts to allow users to generate accurate vocabulary lists for reading.

For me, the promise of more lemmatized texts means the ability to widen the biggest bottleneck in DCC, the production of accurate vocabulary lists. But such data doubtless has many other uses I have not thought of. Do you have any ideas? If so please share a comment on this post.

Agreed that lemmatization of texts is a very important step. It greatly improves the accuracy of morphological analyses, enables proper searching of texts and improved semantic analysis, and, as you have highlighted, supports the generation of vocabulary lists.

In my own work (which relies on lemmatization for both vocabulary lists and morphological disambiguation), I’m also working on intermediate representations between form and lemma (think principal parts) to better model likely student recognition of forms in the generation of scaffolded readers, etc.

Thank you, James, this is an interesting way to look at the issue. Bret Mulligan and I have been working on author-specific dictionaries as a way of providing that intermediate step between a bare lemma and the full fire hose of Lewis & Short. I’d love to hear more of your thinking on this subject!