A fascinating recent paper by Sarah Abowitz, Alison Babeu, and Gregory Crane, all of Tufts University, asks whether “general advances in machine learning could power alternative digital aids” for reading foreign-language sources more easily, “requiring less labor” than the immense effort needed to create traditional print commentaries. In other words, how can we best use technology to transcend the limits of print in helping readers of classical works in the digital age? It’s a question at the heart of DCC, and of the other digital commentary projects they mention, the Ajax Multi-commentary Project and New Alexandria. After surveying classical commentary traditions past and present, print and digital (with some very kind words about DCC), they discuss a study of sample commentaries aimed at different audiences, covering sections of Book 6 of both Thucydides’ History and the Iliad. The authors do not consider the rich tradition of Hebrew and Christian exegesis, but their comments on the classical tradition could perhaps be extended to other traditions as well.

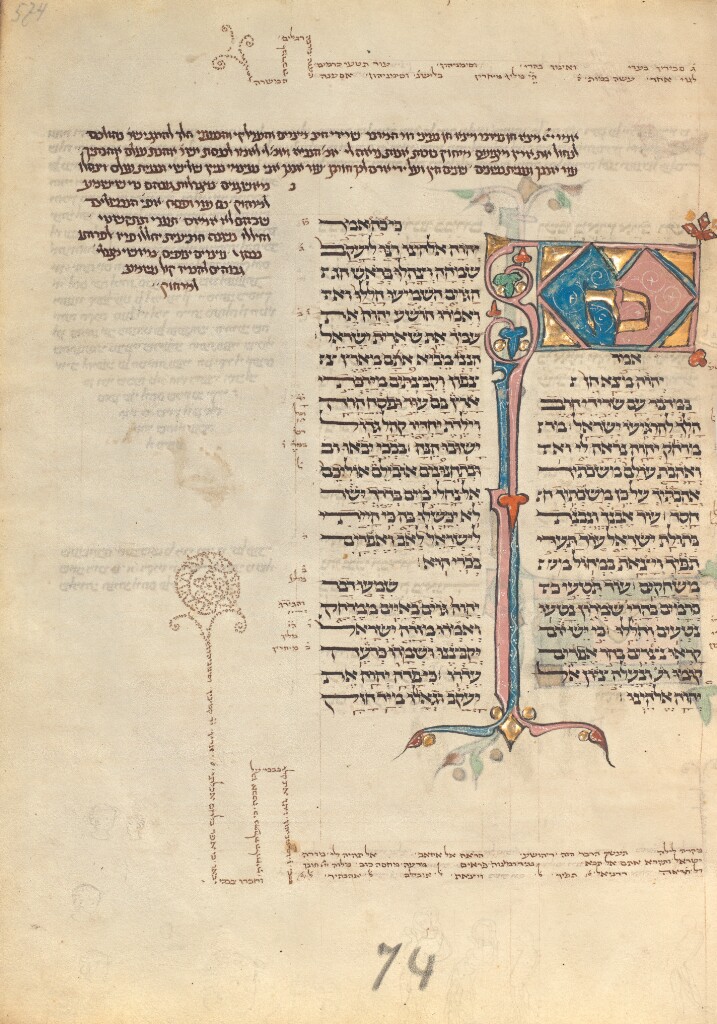

Unknown artist/maker, illuminator; Elijah ben Meshallum, scribe; Elijah ben Jehiel, scribe; et al. Decorated Text Page from the Rothschild Pentateuch, 1296. J. Paul Getty Museum, Los Angeles.

For the study, two coders tagged the comments and sorted them by function into six types: syntactic aids; translations; semantic aids; inconsistency alerts (which inform the reader of changes the editor has made to the original text); stylistic claims (which assert that a certain linguistic feature occurs in a certain way in the text, for example, “this is one of Nicias’ favorite adjectives”); and finally reference pointers, which refer to a specific reference work, primary source, or scholarly paper.

The authors acknowledge that these categories are not watertight, and that quite a few comments straddled more than one of them. Unsurprisingly, commentaries oriented towards scholars have a higher percentage of reference pointers and less in the way of translation and syntactic aids, while those aimed at students have more of both. The bare reference pointers characteristic of scholarly commentaries (I call them “cf.” notes and attempt to exclude them rigorously from DCC) are often dead ends in an open digital medium, since the material they point to is frequently copyrighted and behind paywalls.
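To make the comparison of note profiles concrete, here is a minimal Python sketch of how tagged notes might be tallied by audience. It assumes each note records its intended audience and a set of one or more of the six types (since notes can straddle categories); the labels, the `Note` record, the `category_shares` function, and the toy notes are all hypothetical, not the authors’ data or code.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

# The six note types described in the study; these string labels are our own.
CATEGORIES = (
    "syntactic_aid", "translation", "semantic_aid",
    "inconsistency_alert", "stylistic_claim", "reference_pointer",
)

@dataclass(frozen=True)
class Note:
    audience: str          # e.g. "student" or "scholar" (hypothetical labels)
    categories: frozenset  # a note may straddle more than one type

def category_shares(notes):
    """Return {audience: {category: fraction of that audience's notes carrying it}}."""
    totals = Counter(n.audience for n in notes)
    hits = defaultdict(Counter)
    for n in notes:
        for c in n.categories:
            hits[n.audience][c] += 1
    return {
        aud: {c: hits[aud][c] / totals[aud] for c in CATEGORIES}
        for aud in totals
    }

# Invented toy data, for illustration only.
notes = [
    Note("student", frozenset({"syntactic_aid"})),
    Note("student", frozenset({"translation", "syntactic_aid"})),
    Note("student", frozenset({"semantic_aid"})),
    Note("scholar", frozenset({"reference_pointer"})),
    Note("scholar", frozenset({"reference_pointer", "stylistic_claim"})),
]

for audience, shares in category_shares(notes).items():
    print(audience, {c: round(s, 2) for c, s in shares.items() if s})
```

Because a note may carry more than one type, an audience’s shares can add up to more than 100%, which is one straightforward way of representing the overlap the authors describe.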

The authors point out that there is very little research about user experience of commentaries, of the kind that would be standard procedure in creating any self-respecting digital interface. What do we really know about how students use commentaries on average, and which types of notes are most helpful? We all have opinions on this topic, but apparently little or nothing has been done formally to investigate the question.

DCC’s practice is now to road-test commentaries with students before publication. In some cases, students are involved in choosing notes from existing public domain commentaries for variorum editions. A new cohort of DCC high school interns is on the way for summer 2025. We’re not gathering any data about student preferences from this process, but we could.

Another interesting point the authors make, coming from a computer science perspective, is that the stylistic claims in commentaries are often based on data (for example, the number of occurrences of a given word or phrase), yet classical commentators almost never provide the data to back these assertions up. Some notes in the Cambridge Green & Yellow series do, and Ronald Syme’s Tacitus is a notable pre-digital exception. In a digital medium, it would be quite possible to provide the data, for example from treebanks.
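As a sketch of what “providing the data” might look like, the Python below counts lemma occurrences, optionally restricted to one part of speech, in a treebank exported as CoNLL-U, the tab-separated format used by Universal Dependencies releases. The `count_lemmas` and `to_conllu` helpers and the toy Latin sentence are hypothetical illustrations; a real claim such as “one of Nicias’ favorite adjectives” would also require attributing passages to speakers, which this sketch does not attempt.

```python
from collections import Counter

def count_lemmas(conllu_text, upos=None):
    """Count lemma occurrences in CoNLL-U text, optionally only for one UPOS tag."""
    counts = Counter()
    for line in conllu_text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # blank lines separate sentences; '#' lines are comments
        cols = line.split("\t")
        if len(cols) != 10 or not cols[0].isdigit():
            continue  # skip malformed lines, multiword ranges ("1-2"), empty nodes ("1.1")
        lemma, tag = cols[2], cols[3]
        if upos is None or tag == upos:
            counts[lemma] += 1
    return counts

def to_conllu(rows):
    """Build minimal CoNLL-U token lines from (form, lemma, upos); other columns are '_'."""
    lines = []
    for i, (form, lemma, upos) in enumerate(rows, start=1):
        lines.append("\t".join([str(i), form, lemma, upos] + ["_"] * 6))
    return "\n".join(lines)

# Invented toy sentence, not real treebank data.
sample = "# sent_id = toy-1\n" + to_conllu([
    ("vir", "vir", "NOUN"), ("bonus", "bonus", "ADJ"), ("et", "et", "CCONJ"),
    ("magnus", "magnus", "ADJ"), ("bonum", "bonus", "ADJ"),
    ("consilium", "consilium", "NOUN"), ("dedit", "do", "VERB"),
])

adjectives = count_lemmas(sample, upos="ADJ")
total = sum(count_lemmas(sample).values())
for lemma, n in adjectives.most_common():
    print(f"{lemma}: {n} of {total} tokens")
```

Counting by lemma rather than by surface form is what lets “bonus” and “bonum” register as the same adjective; a commentary note could then cite the count and the corpus it came from.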

The goals of this kind of discussion, it seems to me, are twofold: first, to serve readers better, and second, to use computational means to create more content with less labor. To what extent can artificial intelligence and generative AI aid in this enterprise? This paper suggested to me an interesting approach to testing and moving forward. The creation of a typology of comments is an important advance. It could be refined by analyzing a larger sample of older commentaries, akin to the corpus collected for the Ajax project on Sophocles’ Ajax, though those commentaries are all very scholarly, and not very useful for most readers, in my opinion. It would be better to work with authors for whom multiple parallel school commentaries exist, such as Caesar, Cicero, Vergil, Xenophon, Homer, or Lysias.

Once a refined and well-understood typology of notes is ready, the types can be evaluated in terms of their relative utility for different audiences, and serve as a basis for creating prompts for generative AI. A specific, well-designed prompt might elicit from AI a certain type of comment, and a combination of those could be used to create a draft commentary on new texts, to be evaluated and edited by humans.
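One way to picture type-specific prompting is a shared template filled with a different instruction for each note type. The Python sketch below only assembles prompt strings and calls no AI service; the `NOTE_PROMPTS` wording, the template, and the function names are hypothetical, and which instructions actually elicit useful notes is exactly what would need testing.

```python
from string import Template

# Hypothetical instructions, one per note type; the wording is illustrative only.
NOTE_PROMPTS = {
    "syntactic_aid": "Explain each difficult construction, naming the case or mood and why it is used.",
    "translation": "Give literal English renderings of phrases a student is likely to misconstrue.",
    "semantic_aid": "Gloss words used in an unusual or technical sense in this passage.",
}

# Shared frame; the $-placeholders are filled in by build_prompt.
BASE = Template(
    "You are drafting a $audience commentary on $author, $work $locus.\n"
    "Task: $instruction\n"
    "Return one note per line, in the form 'word or phrase: note'.\n\n"
    "Text:\n$text"
)

def build_prompt(note_type, *, audience, author, work, locus, text):
    """Fill the shared template with the instruction for one note type."""
    if note_type not in NOTE_PROMPTS:
        raise ValueError(f"no prompt defined for note type {note_type!r}")
    return BASE.substitute(
        audience=audience, author=author, work=work, locus=locus,
        instruction=NOTE_PROMPTS[note_type], text=text,
    )

def build_draft_prompts(note_types, **passage):
    """One prompt per note type; the resulting notes would be merged and edited by humans."""
    return {t: build_prompt(t, **passage) for t in note_types}

prompts = build_draft_prompts(
    ["syntactic_aid", "semantic_aid"],
    audience="student", author="Caesar", work="Bellum Gallicum", locus="1.1",
    text="Gallia est omnis divisa in partes tres.",
)
print(prompts["syntactic_aid"])
```

Keeping one instruction per note type means each type’s prompt can be evaluated and revised separately, in line with the idea of judging types by their utility for different audiences.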

Another key piece of the puzzle is something that Gregory Crane mentioned at the recent SCS panel: HathiTrust now has marvelously good multilingual OCR in its back end. In theory, this will make it possible to harvest existing public domain commentaries on a much larger scale, tag them, use that data to build a more extensive collection of note types, and then do a kind of sifting operation in which users select the notes they find particularly helpful. The knowledge thus gained could inform prompts for generative AI. Obviously, anything produced by generative AI would have to be extensively edited by humans, but getting us part of the way would be extremely helpful. My own experiments in generating vocabulary lists with ChatGPT seem promising.
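The sifting step could be scored in many ways. One simple option, borrowed from the ranking of user-rated items online rather than from the paper, is to rank competing notes on the same word by the lower bound of the Wilson score interval for the share of readers who marked them helpful, so that a note with 2 of 2 votes does not automatically outrank one with 40 of 50. The `CandidateNote` record, the vote counts, and the commentary names in this Python sketch are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class CandidateNote:
    source: str       # harvested commentary the note came from (hypothetical)
    lemma: str        # word or phrase the note explains
    text: str
    helpful: int = 0  # readers who marked the note helpful
    shown: int = 0    # readers who saw the note

def wilson_lower_bound(helpful, shown, z=1.96):
    """Lower bound of the Wilson score interval (about 95% for z=1.96) for helpful/shown."""
    if shown == 0:
        return 0.0
    p = helpful / shown
    denom = 1 + z * z / shown
    centre = p + z * z / (2 * shown)
    margin = z * math.sqrt(p * (1 - p) / shown + z * z / (4 * shown * shown))
    return (centre - margin) / denom

def sift(notes, keep=1):
    """For each lemma, keep the `keep` notes with the highest Wilson lower bound."""
    by_lemma = {}
    for note in notes:
        by_lemma.setdefault(note.lemma, []).append(note)
    return {
        lemma: sorted(group, key=lambda n: wilson_lower_bound(n.helpful, n.shown),
                      reverse=True)[:keep]
        for lemma, group in by_lemma.items()
    }

# Invented votes: a perfect score from few readers vs. a strong score from many.
a = CandidateNote("Commentary A", "divisa", "note A", helpful=2, shown=2)
b = CandidateNote("Commentary B", "divisa", "note B", helpful=40, shown=50)
for lemma, kept in sift([a, b]).items():
    print(lemma, [n.source for n in kept])
```

The lower bound rewards notes that many readers have found helpful, which suits a pool where some harvested notes will have been seen by only a handful of users.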