Organizing our received humanities materials as if they were simply informational depositories, computer markup as currently imagined handicaps or even baffles altogether our moves to engage with the well-known dynamic functions of textual works. ((Jerome McGann, A New Republic of Letters: Memory and Scholarship in the Age of Digital Reproduction (Cambridge, MA: Harvard University Press, 2014), pp. 107-108.))

This sentence from Jerome McGann’s challenging new book on digitization and the humanities (part of a chapter on TEI called “Marking Texts in Many Directions,” originally published in 2004) rang out to me with a clarity derived from its relevance to my own present struggles and projects. The question for a small project like ours, “to mark up or not to mark up in XML-TEI?” is an acutely anxious one. The labor involved is considerable, the delays and headaches numerous. The payoff is theoretical and long term. But the risk of not doing so is oblivion. Texts stuck in HTML will eventually be marginalized, forgotten, unused, like websites from the 1990s. TEI promises pain now, but with a chance of the closest thing the digital world has to immortality. It holds out the possibility of re-use, of a dynamic and interactive future.

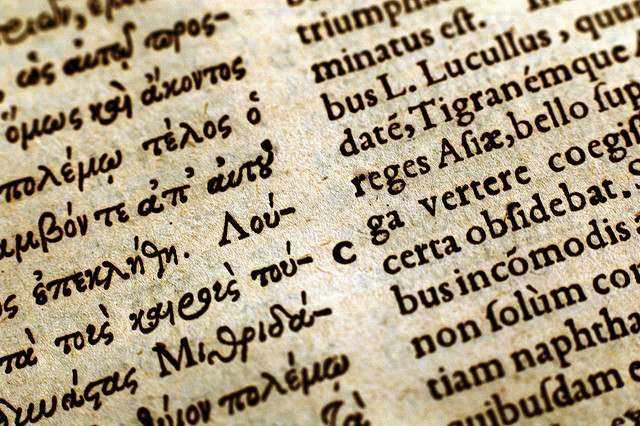

McGann points out that print always has a twin function as both archive and simulation of natural language. Markup decisively subordinates the simulation function of text in favor of ever better archiving. This may be why XML-TEI has such a profound appeal to many classicists, and why it makes others, who value the performative, aesthetic aspects of language more than the archiving of it, so uneasy.

McGann expresses some hope for future interfaces that work “topologically” rather than linearly to integrate these functions, but that’s way in the future. What we have right now is an enormous capacity to enhance the simulation capacity of print via audio and other media. But if we (I mean DCC) spend time on that aspect of web design, it takes time away from the “store your nuts for winter” activities of TEI tagging.

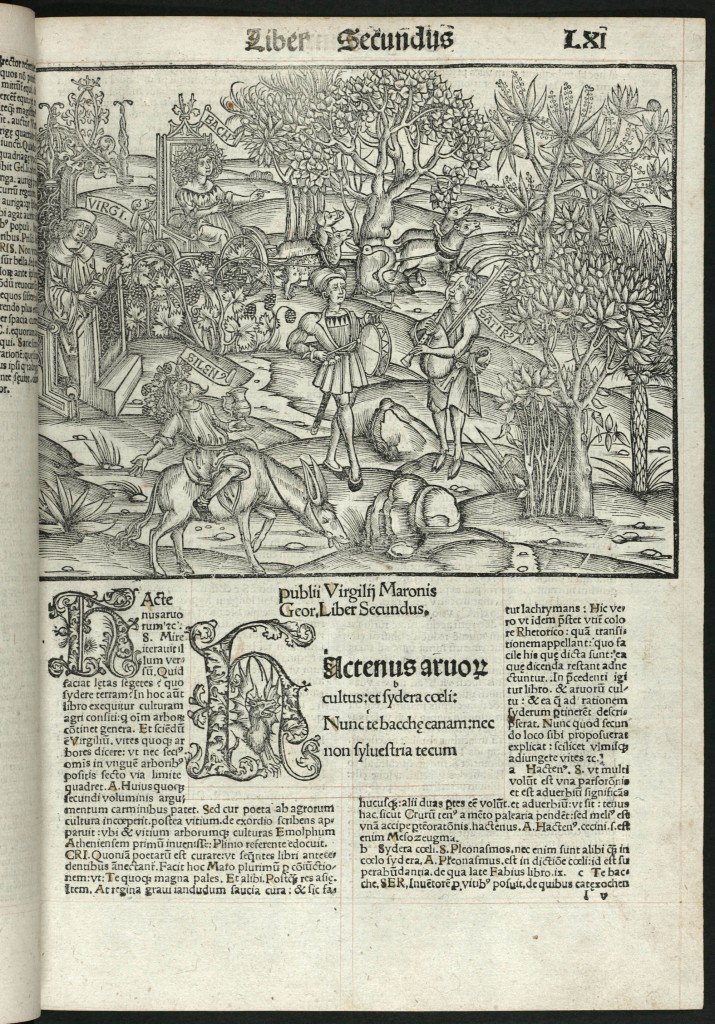

Virgil. Opera (Works). Strasburg: Johann Grüninger, 1502. Sir George Grey Special Collections, Auckland City Libraries

These issues are in the forefront of my mind as I am in the thick of preparations for a new multimedia edition of the Aeneid that will, when complete, be unlike anything else available in print or on the web, I believe. It will have not just notes, but a wealth of annotated images (famous art works and engravings from historical illustrated Aeneid editions), audio recordings, video scansion tutorials, recordings of Renaissance music on texts from the Aeneid, a full Vergilian lexicon based on that of Henry Frieze, comprehensive linking to a newly digitized version of Allen & Greenough’s Latin Grammar, complete running vocabulary lists for the whole poem, and other enhancements.

Embarking on this I will have the help of many talented people:

- Lucy McInerney (Dickinson ’15), Tyler Denton (University of Kentucky MA ’14), and Nick Stender (Dickinson ’15) will help this summer to gather grammatical and explanatory notes on the Latin from various existing school editions in the public domain, which I will then edit.

- Derek Frymark (Dickinson ’12) is working on the Vergilian dictionary database, digitizing and editing Frieze-Dennison. This will be combined with data generously provided by LASLA to produce the running vocabulary lists.

- Meagan Ayer (PhD University of Buffalo ’13) is putting the finishing touches on the HTML of Allen & Greenough. Kaylin Bednarz (Dickinson ’15) also worked on this.

- Melinda Schlitt, Prof. of Art and Art History at Dickinson, will work on essays on the artistic tradition inspired by the Aeneid in fall of 2014, assisted by Gillian Pinkham (Dickinson ’14).

- Ryan Burke, our heroic Drupal developer, is creating the interface that will allow for attractive viewing of images along with their metadata and annotations, a new interface for Allen & Greenough, and many other things.

- Blake Wilson, Prof. of Music at Dickinson, and director of the Collegium Musicum, will be recording choral music based on texts from the Aeneid.

And I expect to have other collaborators down the road as well, faculty, students, and teachers (let me know if you want to get involved!). My own role at the moment is an organizational one, figuring out which of these many tasks is most important and how to implement them, picking illustrations, trying to get rights, and figuring out what kind of metadata we need. I’ll make the audio recordings and scansion tutorials, and no doubt write a lot of image annotations as we go, and do tons of editing. The plan is to have the AP selections substantially complete by the end of the summer, with Prof. Schlitt’s art historical material and the music to follow in early 2015. My ambition is to cover the entire Aeneid in the coming years.

Faced with this wealth of possibilities for creative simulation, for the sensual enhancement of the Aeneid, I have essentially abandoned, for now at least, the attempt to archive our annotations via TEI. I went through some stages. Denial: this TEI stuff doesn’t apply to us at all; it’s for large database projects at big universities with massive funding. Grief: it’s true, lack of TEI dooms the long-term future of our data; we’re in a pathetic silo; it’s all going to be lost. Hope: maybe we can actually do it; if we just get the right set of minimal tags. Resignation: we just can’t do everything, and we have to do what will make the best use of our resources and make the biggest impact now and in the near future.

One of the things that helped me make this decision was a conversation via email with Bret Mulligan and Sam Huskey. Bret is an editor at the DCC, author of the superb DCC edition of Nepos’ Life of Hannibal, and my closest confidant on matters of strategy. Sam is the Information Architect at the APA, and a leader in the developing Digital Latin Library project, which, if funded, will create the infrastructure for digitized peer reviewed critical texts and commentaries for the whole history of Latin.

When queried about plans to mark up annotation content, Sam acknowledged that developing the syntax for this was a key first step in creating this new archive. He plans at this point to use not TEI but RDF triples, the linked data scheme that has worked so well for Pleiades and Pelagios. RDF triples basically allow you to say for anything on the web, x = y. You can connect any chunk of text with any relevant annotation, in the way that Pleiades and Pelagios can automatically connect any ancient place with any tagged photo of that place on flickr, or any tagged reference to it in DCC or other text database. I can see how, for the long term development of infrastructure, RDF triples would be the way to go, in so far as they would create the potential for a linked data approach to annotation (including apparatus).
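For readers who have not met triples before, here is a minimal sketch of the idea in plain Python, with each triple written as a (subject, predicate, object) tuple. Everything in it is an assumption made for illustration: the identifiers, the predicate names (`hasAnnotation`, `mentionsPlace`, `depicts`), and the helpers `objects_for` and `subjects_for` are hypothetical, and none of it is the actual vocabulary of Pleiades, Pelagios, or the DLL.

```python
# Each statement is a (subject, predicate, object) triple.
# All identifiers are hypothetical placeholders, not real URIs
# and not an established linked-data vocabulary.
from typing import List, Tuple

Triple = Tuple[str, str, str]

TRIPLES: List[Triple] = [
    ("text:aeneid/1.1-7", "hasAnnotation", "dcc:note/101"),
    ("text:aeneid/1.1-7", "hasAnnotation", "perseus:note/55"),
    ("text:aeneid/1.1-7", "mentionsPlace", "place:troy"),
    ("photo:example/1", "depicts", "place:troy"),
]


def objects_for(triples: List[Triple], subject: str, predicate: str) -> List[str]:
    """Return every object linked to `subject` by `predicate`."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def subjects_for(triples: List[Triple], predicate: str, obj: str) -> List[str]:
    """Return every subject linked to `obj` by `predicate`."""
    return [s for s, p, o in triples if p == predicate and o == obj]


# Which annotations are attached to this chunk of text?
notes = objects_for(TRIPLES, "text:aeneid/1.1-7", "hasAnnotation")

# Follow the links: chunk -> places it mentions -> photos of those places.
places = objects_for(TRIPLES, "text:aeneid/1.1-7", "mentionsPlace")
photos = [ph for place in places for ph in subjects_for(TRIPLES, "depicts", place)]

print(notes)
print(photos)
```

The point of the sketch is only that the text, the notes, and the photos never need to know about each other directly; anyone can publish a new triple, and the connection appears.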

The fact that the vocabulary for doing that is not ready yet settles my decision about what to do with the Aeneid. Greg Crane too, and the Perseus/OGL team at Tufts and the University of Leipzig, are working on a new infrastructure for connecting ancient texts to annotation content, and Prof. Crane has been very generous with his time in advising me about DCC. He seemed to be a little frustrated that the system for reliably encoding and sharing annotations is not there yet, and eager to help us just get on with the business of creating new freely available annotation content in the meantime, and that’s what we’re doing. Our small project is not in a position to get involved in the building of the infrastructure. We’ll just have to work on complying when and if an accepted schema appears.

For those who are in a position to develop this infrastructure, here are my two sesterces. Perhaps the goal is someday to have something like Pleiades for texts, with something like Pelagios for linking annotation content. You could have a designated chunk of text displayed, then off to the bottom right somewhere there could be a list of types and sources of annotation content. “15 annotations to this section in DCC,” “25 annotations to this section in Perseus,” “3 place names that appear in Pleiades,” “55 variant readings in DLL apparatus bank,” “5 linked translations available via Alpheios,” etc., and the user could click and see that content as desired.

It seems to me that the only way to wrangle all this content is to deal in chunks of text, paragraphs, line ranges, not individual words or lemmata. We’re getting ready to chunk the Aeneid, and I think I’m going to use Perseus’ existing chunks. Standard chunkings would serve much the same purpose as numeration in the early printed editions, Stephanus numbers for Plato and so forth. Annotations can obviously flag individual words and lemmata, but it seems like for linked data approaches you simply can’t key things to small units that won’t be unique and might in fact change if a manuscript reading is revised. I am aware of the CTS-URN architecture, and consider it to be a key advance in the history of classical studies. But I am speaking here just about linking annotation content to chunks of classical texts.
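Here is a rough sketch, in Python, of what keying annotations to chunks rather than to words might look like, and of how the per-source summary list imagined above could fall out of it. The chunk boundaries, the sample notes, and the names `Chunk`, `Annotation`, `chunk_for`, and `summary` are all hypothetical; in particular these are not Perseus’ actual chunks, and the note text is placeholder data.

```python
# Annotations may flag a single word, but they are stored and linked
# by the chunk (line range) that contains them.
# Chunk boundaries and notes below are invented for illustration.
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Chunk:
    book: int
    start: int
    end: int  # inclusive

    def contains(self, book: int, line: int) -> bool:
        return self.book == book and self.start <= line <= self.end


@dataclass(frozen=True)
class Annotation:
    source: str  # e.g. "DCC" or "Perseus"
    book: int
    line: int
    lemma: str   # the word flagged; not used as the linking key
    text: str


CHUNKS = [Chunk(1, 1, 7), Chunk(1, 8, 11), Chunk(1, 12, 33)]

ANNOTATIONS = [
    Annotation("DCC", 1, 1, "arma", "placeholder note"),
    Annotation("Perseus", 1, 2, "Italiam", "placeholder note"),
    Annotation("DCC", 1, 3, "litora", "placeholder note"),
    Annotation("DCC", 1, 8, "Musa", "placeholder note"),
]


def chunk_for(book: int, line: int, chunks: List[Chunk] = CHUNKS) -> Optional[Chunk]:
    """Find the chunk containing a given line, or None if no chunk covers it."""
    for chunk in chunks:
        if chunk.contains(book, line):
            return chunk
    return None


def summary(chunk: Chunk, annotations: List[Annotation] = ANNOTATIONS) -> List[str]:
    """Build the 'N annotations to this section in X' list for one chunk."""
    counts = Counter(a.source for a in annotations if chunk.contains(a.book, a.line))
    return [
        f"{n} annotation{'' if n == 1 else 's'} to this section in {source}"
        for source, n in sorted(counts.items())
    ]


for line in summary(CHUNKS[0]):
    print(line)
```

If an editor later prefers a different reading for a word, the note may need revising, but the link from chunk to note still holds, which is the stability that standard numeration has always provided.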

What Prof. Crane would like is more machine operability, so you can re-use annotations and automate the process. That way, I don’t have to write the same annotation over and over. If, say, iam tum cum in Catullus 1 means the same thing as iam tum cum in other texts, you should be able to re-use the note. Likewise for places and personal names, you shouldn’t have to explain afresh every time which one of the several Alexandrias or Diogeneses you are dealing with.

I personally think that, while the process of annotation can be simplified, especially by linking out to standard grammars rather than re-explaining grammatical points every time, and by creating truly accurate running vocabulary lists, the dream of machine operable annotation is not a realistic one. You can use reference works to make the process more efficient. But a human will always have to do that, and more importantly the human scholar figure will always need to be in the forefront for classical annotation. The audience prefers it, and the qualified specialists are out there.

This leads me to my last point for this overlong post, that getting the qualified humans in the game of digital annotation is for me the key factor. I am so thrilled the APA is taking the lead with DLL. APA has access to the network of scholars in a way that the rest of us do not, and I look forward to seeing the APA leverage that into some truly revolutionary quality resources in the coming years. Sorry, it’s the SCS now!